DiET-GS and DiET-GS++

Diffusion Prior and Event Stream-Assisted Motion Deblurring 3D Gaussian Splatting

Panels: Event stream, Blur images + 3DGS, DiET-GS++ (ours)

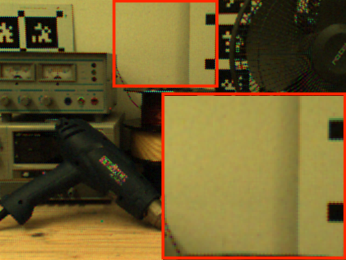

Overall framework of DiET-GS. Stage 1 (DiET-GS) optimizes the deblurring 3DGS by leveraging event streams and a diffusion prior. To preserve accurate color and clean details, we exploit the EDI prior in multiple ways, including color supervision $C$, guidance for fine-grained details $I$, and additional regularization $\tilde{I}$ via EDI simulation. Stage 2 (DiET-GS++) is then employed to maximize the effect of the diffusion prior by introducing extra learnable parameters $\mathbf{f}_{\mathbf{g}}$. DiET-GS++ further refines the images rendered by DiET-GS, effectively enhancing rich edge features. More details are explained in Sec. 4.1 and Sec. 4.2 of the main paper.
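To make the EDI prior more concrete, the sketch below illustrates the standard Event-based Double Integral relation for a single pixel: a blurry intensity $B$ is modeled as the latent sharp intensity $L(f)$ at a reference time multiplied by the exposure average of $\exp(c\,E(t))$, where $E(t)$ is the accumulated event polarity since the reference time and $c$ is the contrast threshold. This is only an illustration of the general EDI model, not the implementation used in DiET-GS. The function names, the discretization into time bins, and the choice of the exposure start as the reference time are all assumptions made for this example.

```python
import math


def exposure_gains(event_bins, contrast_threshold):
    """Sample exp(c * E(t)) at the reference time and after each time bin.

    event_bins[k] is the signed polarity sum of one pixel in the k-th bin of
    the exposure. E(t) is the running sum since the reference time, which is
    assumed (for this sketch) to be the start of the exposure.
    """
    gains = [1.0]  # exp(c * 0) at the reference time
    cumulative = 0.0
    for polarity_sum in event_bins:
        cumulative += polarity_sum
        gains.append(math.exp(contrast_threshold * cumulative))
    return gains


def simulate_blur(latent, event_bins, contrast_threshold):
    """Forward model: blur = latent * mean over the exposure of exp(c * E(t))."""
    gains = exposure_gains(event_bins, contrast_threshold)
    return latent * sum(gains) / len(gains)


def edi_latent_intensity(blurred, event_bins, contrast_threshold):
    """Inverse model: divide the blurry intensity by the mean exposure gain."""
    gains = exposure_gains(event_bins, contrast_threshold)
    return blurred * len(gains) / sum(gains)
```

As a toy check, blurring a latent intensity of 0.5 with `simulate_blur(0.5, [1, 0, -1, 2], 0.2)` and passing the result to `edi_latent_intensity` with the same bins and threshold recovers 0.5 up to floating-point error. In practice the contrast threshold is usually unknown and event streams are noisy, which is one reason EDI alone can leave artifacts.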

Quantitative comparisons on novel-view synthesis with both synthetic and real-world datasets. The results are averaged over all scenes within each dataset. The best results are in bold, while the second-best results are underlined. Our DiET-GS significantly outperforms existing baselines in PSNR, SSIM, and LPIPS, while our DiET-GS++ achieves the best scores in no-reference image quality assessment (NR-IQA) metrics such as MUSIQ and CLIP-IQA.

Quantitative comparisons on single image deblurring with real-world datasets. Our DiET-GS++ consistently outperforms all baselines in all five real-world scenes.
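For readers unfamiliar with the reference-based metrics, the following is a minimal sketch of how PSNR is commonly computed and then averaged over scenes. It is not the evaluation code behind the reported numbers. The helper names, the flat-list image representation, and the assumption that intensities lie in $[0, 1]$ are choices made for this example only.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_over_scenes(per_scene_scores):
    """Average a metric over scenes, as in a per-dataset summary row."""
    if not per_scene_scores:
        raise ValueError("need at least one scene")
    return sum(per_scene_scores) / len(per_scene_scores)
```

Higher PSNR indicates a rendering closer to the ground truth. SSIM and LPIPS need dedicated implementations, and NR-IQA metrics such as MUSIQ and CLIP-IQA rely on pretrained models, so they are not sketched here.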

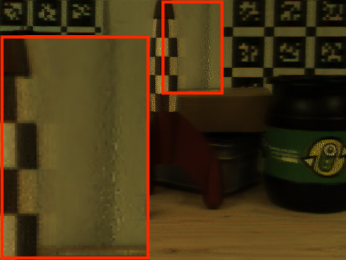

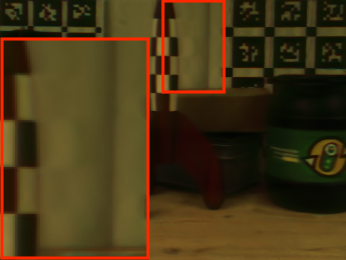

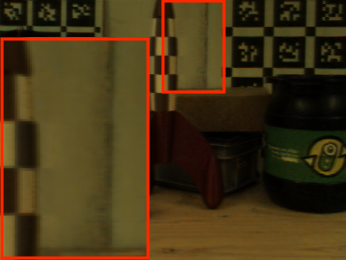

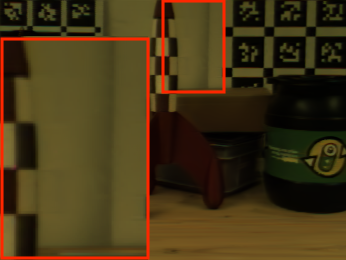

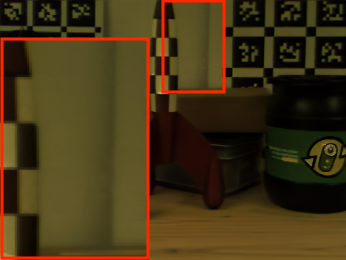

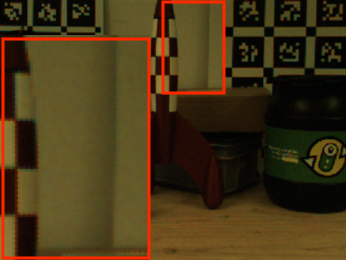

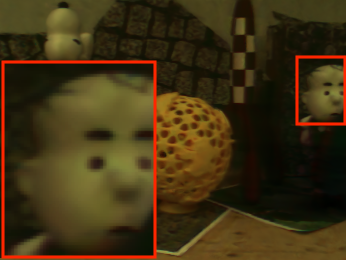

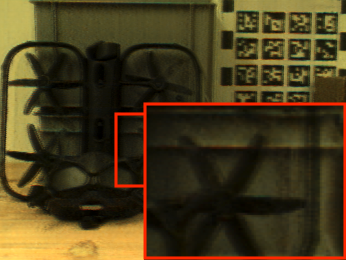

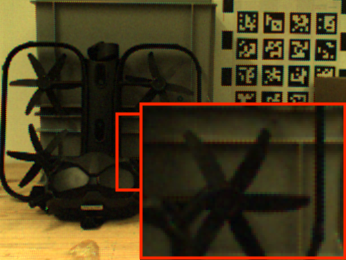

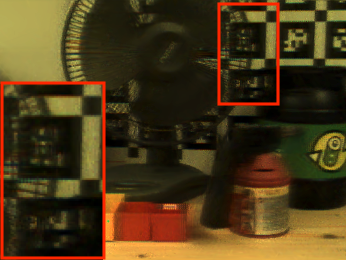

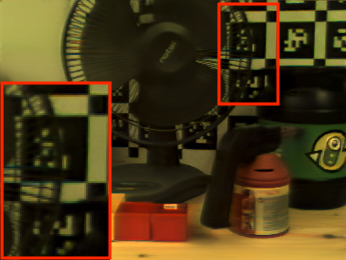

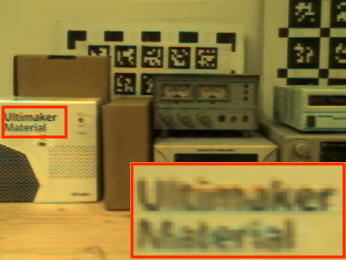

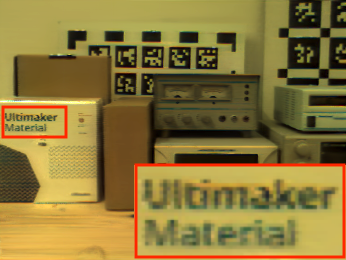

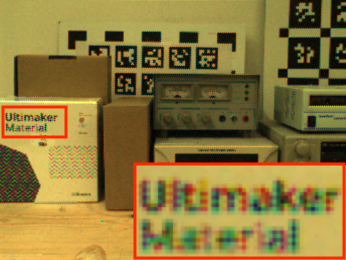

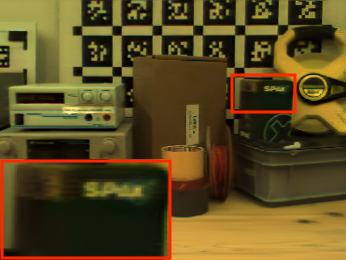

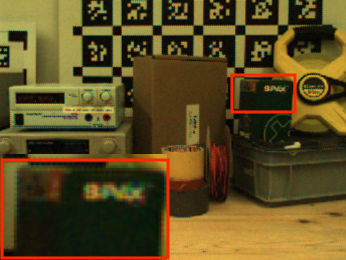

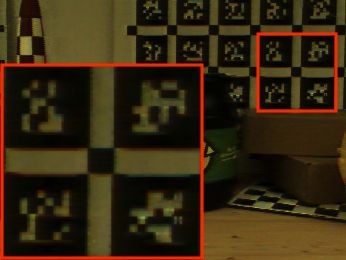

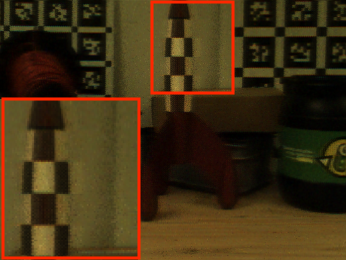

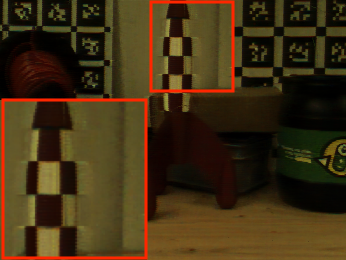

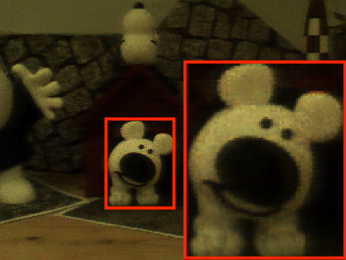

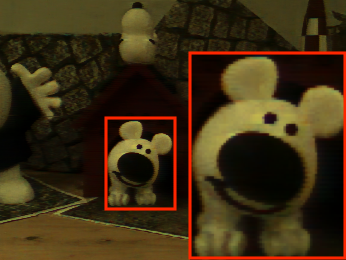

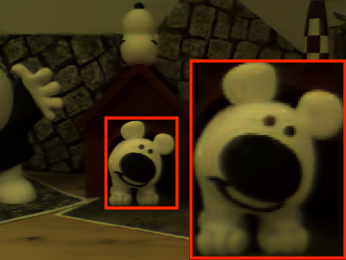

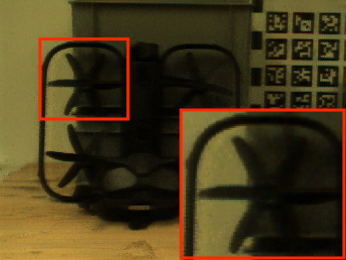

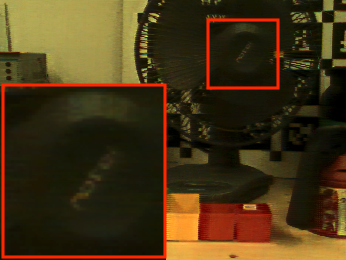

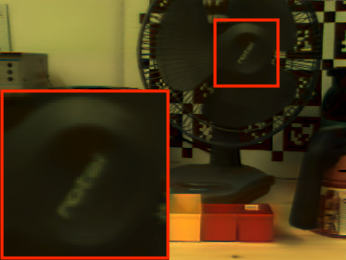

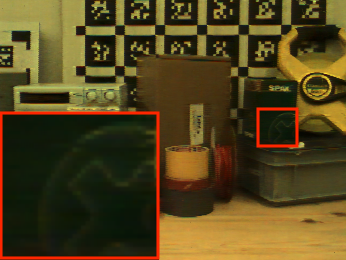

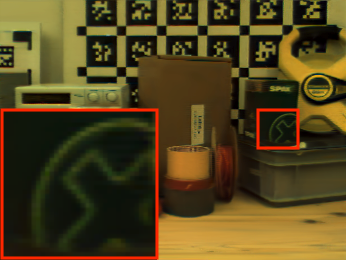

DiET-GS shows cleaner texture with more accurate details compared to the event-based baselines, while DiET-GS++ further enhances these features with sharper definition, achieving the best visual quality.

Panels: Blurry Image, EDI+GS, E2NeRF, Ev-DeblurNeRF, DiET-GS (Ours), DiET-GS++ (Ours), GT

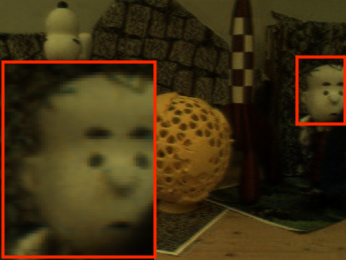

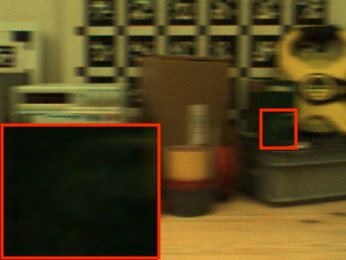

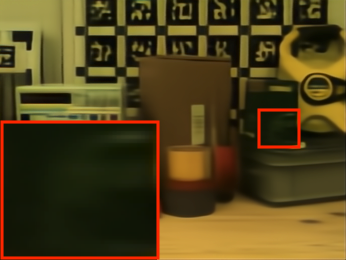

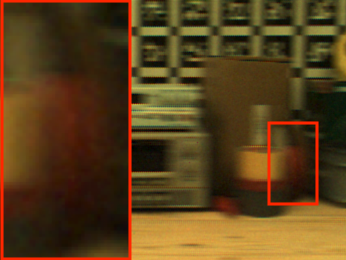

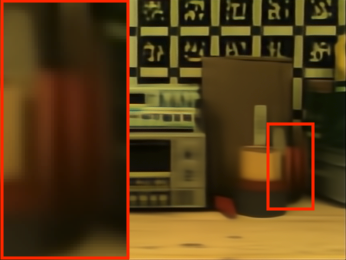

Additional visualization results on novel view synthesis in the real-world dataset (Suppl)

Panels: EDI+GS, E2NeRF, Ev-DeblurNeRF, DiET-GS++ (Ours), GT

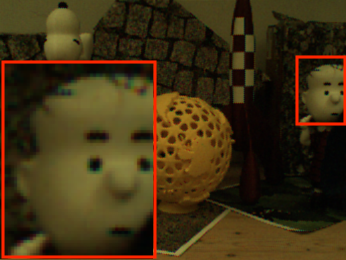

Additional visualization results on novel view synthesis in the synthetic dataset (Suppl)

Panels: EDI+GS, E2NeRF, Ev-DeblurNeRF, DiET-GS++ (Ours), GT

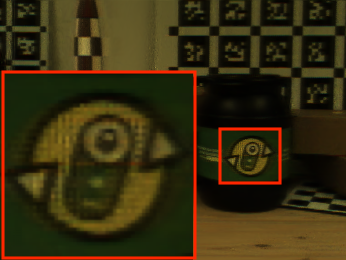

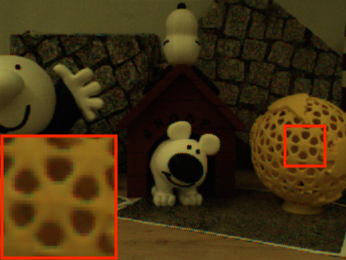

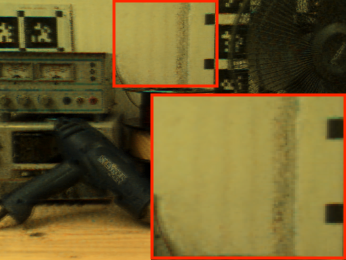

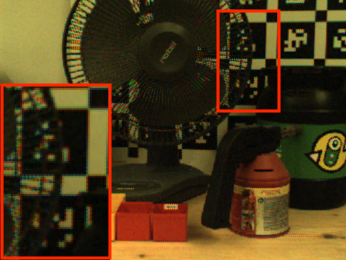

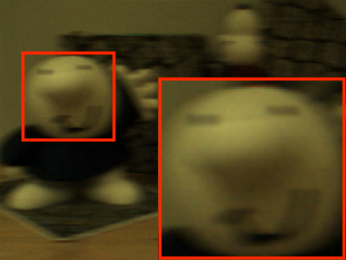

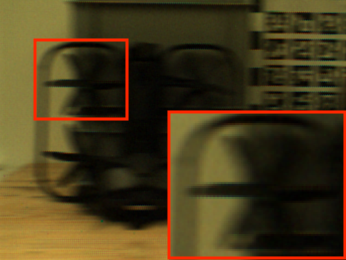

Here, we also present qualitative comparisons for single image deblurring. As shown in the second column, the frame-based image deblurring method NAFNet often produces inaccurate details, since it relies solely on blurry images to recover them. EDI and BeNeRF recover more precise details by benefiting from event-based cameras, but they still exhibit severe artifacts. Our DiET-GS++ shows the best visual quality, with cleaner and well-defined details, by leveraging EDI and a pretrained diffusion model as priors.

Panels: Blur Image, NAFNet, EDI, BeNeRF, DiET-GS++ (Ours)

@inproceedings{lee2025diet,
  title={DiET-GS: Diffusion Prior and Event Stream-Assisted Motion Deblurring 3D Gaussian Splatting},
  author={Lee, Seungjun and Lee, Gim Hee},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={21739--21749},
  year={2025}
}